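In an era when big data is hyped as heavily as real estate, Spark is a household name. Spark is Apache's open-source compute engine: it performs low-latency, distributed computation, can handle big data, and ships with built-in machine learning. Spark added support for R a while ago, and RStudio has since released a new package, sparklyr. So as not to fall behind, I took a quick look at what sparklyr is all about.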

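sparklyr is an interface between R and Spark. You hand the heavy computation to Spark and then do the statistics or visualization in R, which makes the whole workflow more efficient. Better still, sparklyr works with dplyr's data-manipulation functions, so there is no need to learn another programming language.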

Connecting to Spark

`library(devtools)`

List the available Spark versions

`library(sparklyr)`
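The two snippets above appear to be cut off after their first line. As a rough sketch of what these steps usually look like, and assuming sparklyr is installed from CRAN (the post loads devtools, so it may have installed the GitHub version instead), the versions can be listed like this:

```r
# Assumption: sparklyr comes from CRAN; uncomment to install it.
# install.packages("sparklyr")

library(sparklyr)

# Spark versions that spark_install() is able to download
available <- spark_available_versions()
print(available)

# Spark versions already installed on this machine (may be empty)
installed <- spark_installed_versions()
print(installed)
```

Comparing the two tables is a quick way to decide which `version` to pass to `spark_install()`.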

Download a Spark version

`spark_install(version = "1.6.2", hadoop_version = "2.6")`

Connect to a local Spark instance

`sc <- spark_connect(master = "local")`

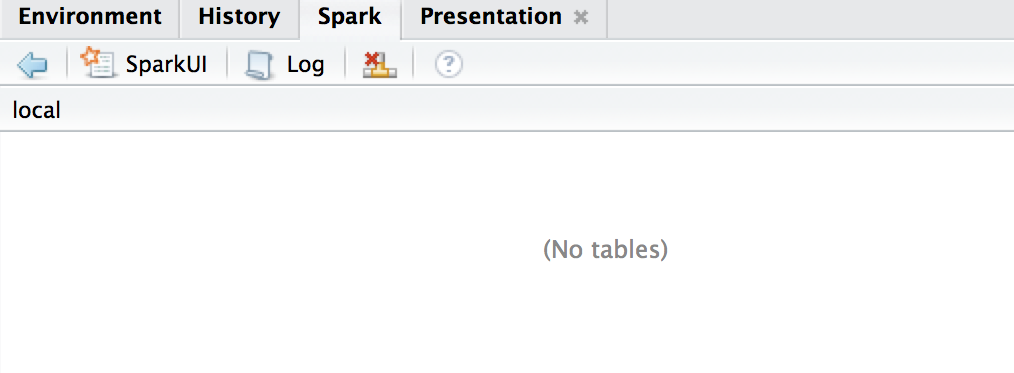

RStudio also provides a UI for managing Spark connections.
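For completeness, here is a minimal sketch of a full connection lifecycle from code rather than from the RStudio pane. It assumes the Spark 1.6.2 build installed earlier in this post is still present; the variable name `wanted_version` is only for illustration.

```r
library(sparklyr)

# Assumption: this version was installed earlier with spark_install()
wanted_version <- "1.6.2"

# Pin the Spark version explicitly instead of relying on the default
sc <- spark_connect(master = "local", version = wanted_version)

# Confirm the session is alive and see which Spark version it runs
is_open <- connection_is_open(sc)
print(is_open)
print(spark_version(sc))

# Release the local Spark process when finished
spark_disconnect(sc)
```

Pinning `version` helps when several Spark builds are installed side by side, and disconnecting frees the memory held by the local Spark process.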

A few important functions

- Read data from a file into Spark:

`spark_read_csv`, `spark_read_json`, `spark_read_parquet`. Taking CSV as an example:

`spark_read_csv(sc, name = "mydata", path = "xx.csv", header = TRUE, charset = "GB18030")`

- Copy a local R data set to Spark

`library(nycflights13)`
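The snippet above stops after loading the package. A minimal sketch of the copy step itself, assuming a local Spark connection and the `flights` data frame from nycflights13, might look like this:

```r
library(sparklyr)
library(dplyr)
library(nycflights13)

sc <- spark_connect(master = "local")

# copy_to() uploads a local data frame and returns a reference to the Spark table
flights_tbl <- copy_to(sc, nycflights13::flights, name = "flights", overwrite = TRUE)

# Names of the tables currently registered in this Spark session
tables <- src_tbls(sc)
print(tables)

spark_disconnect(sc)
```

`copy_to()` pushes the data through the R session, so it suits small or medium data sets; for large files the `spark_read_*` functions are usually the better route.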

- Copy data from Spark into R's memory

`mydata1 = collect(flights)`

- Save the result as a temporary table

`compute(mydata1, "flights")`
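In the snippets above, `collect()` runs before `compute()`, so `compute()` receives a data frame that already lives in R. The two are more commonly used the other way round: `compute()` materializes a lazy query as a temporary table inside Spark, and `collect()` pulls the finished result into R. Here is a sketch under that assumption; the filter and the table name `flights_delayed` are made up for illustration.

```r
library(sparklyr)
library(dplyr)
library(nycflights13)

sc <- spark_connect(master = "local")
flights_tbl <- copy_to(sc, nycflights13::flights, name = "flights", overwrite = TRUE)

# A lazy query: nothing is executed on Spark yet
delayed <- flights_tbl %>% filter(dep_delay > 60)

# compute() runs the query and stores the result as a temporary Spark table
delayed_tbl <- compute(delayed, name = "flights_delayed")
tables <- src_tbls(sc)

# collect() brings the rows into an ordinary R data frame
delayed_local <- collect(delayed_tbl)
print(nrow(delayed_local))

spark_disconnect(sc)
```

Keeping intermediate results in Spark with `compute()` avoids recomputing the same query, while `collect()` is best saved for results small enough to fit in R's memory.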

- Translate dplyr statements into Spark SQL

`bestworst = flights %>% …`
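The pipeline above is cut off after its first line, so I won't guess at the rest. To show the translation at work, here is a small stand-in query (mean departure delay per carrier, which is my own example and not the one from the post) along with the SQL that dplyr generates for it:

```r
library(sparklyr)
library(dplyr)
library(nycflights13)

sc <- spark_connect(master = "local")
flights_tbl <- copy_to(sc, nycflights13::flights, name = "flights", overwrite = TRUE)

# Hypothetical query: average departure delay per carrier, worst first
delay_by_carrier <- flights_tbl %>%
  group_by(carrier) %>%
  summarise(mean_dep_delay = mean(dep_delay, na.rm = TRUE)) %>%
  arrange(desc(mean_dep_delay))

# Print the SQL that would be sent to Spark; the query itself has not run yet
show_query(delay_by_carrier)

spark_disconnect(sc)
```

Because the query is lazy, `show_query()` is a cheap way to check what Spark will be asked to do before any data moves.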

- Write the result to HDFS

`spark_write_parquet(tbl, "hdfs://hdfs.company.org:9000/hdfs-path/data")`
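The HDFS address above is only a placeholder. To try the same call without a cluster, a sketch like the following writes to a local temporary directory instead and reads the files back. This assumes local mode resolves plain paths against the local file system; the table names and the use of `mtcars` are my own choices.

```r
library(sparklyr)
library(dplyr)

sc <- spark_connect(master = "local")

# Small built-in data set, used here only as demo data
cars_tbl <- copy_to(sc, mtcars, name = "mtcars_demo", overwrite = TRUE)

# Assumption: a local temp directory stands in for the HDFS path
out_path <- file.path(tempdir(), "mtcars_parquet")
spark_write_parquet(cars_tbl, path = out_path, mode = "overwrite")

# Read the Parquet files back into a new Spark table and count the rows
cars_back <- spark_read_parquet(sc, name = "mtcars_back", path = out_path)
rows_back <- sdf_nrow(cars_back)
print(rows_back)

spark_disconnect(sc)
```

On a real cluster, only `out_path` would need to change to the `hdfs://` URL.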

Along the way I stumbled on dplyr's sampling functions, which also accept `replace = TRUE`.

`sample_n(data, 10) # randomly draw 10 rows`
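To make the effect of `replace = TRUE` concrete, here is a small sketch on a made-up five-row table (the name `data` and its `id` column are hypothetical). It runs in plain dplyr without Spark:

```r
library(dplyr)

set.seed(42)

# Hypothetical toy data with five rows
data <- tibble(id = 1:5)

# Without replacement: each row can appear at most once
no_repl <- sample_n(data, 3)

# With replacement: rows may repeat, so asking for more rows than exist is fine;
# sample_n(data, 10) without replace = TRUE would raise an error here
with_repl <- sample_n(data, 10, replace = TRUE)

# sample_frac() draws a fraction of the rows instead of a fixed count
frac <- sample_frac(data, 0.4)

print(no_repl)
print(with_repl)
print(frac)
```

Recent dplyr versions steer users toward `slice_sample()` for the same job, but `sample_n()` and `sample_frac()` still work.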

I'll look at Spark's machine-learning side when I have time. To be continued…